Until now, a third-party network virtual appliance (NVA) has been required if you wanted a "real" firewall in Azure, in addition to the basic functionality provided by Network Security Groups (NSGs). Yesterday, however, Microsoft released its stateful firewall service in Azure in public preview, simply named Azure Firewall.

Does this mean the end for third-party appliances with firewall functionality in Azure? In this post I will try to answer which scenarios Azure's new firewall covers in this preview, and show how to get started and try it out in your own environment.

The Basics

First of all, it is important to understand that the firewall in this preview is only intended to handle outbound traffic to the Internet. To protect resources that are published to the Internet, you can use, for example, Application Gateway with WAF or similar services.

The following capabilities are available in the public preview:

- Outbound FQDN filtering
  - FQDN filtering is one of the most common asks that customers have for outbound filtering to the Internet, since it quickly becomes very difficult to maintain lists of IP addresses and subnets for Internet-based services. Only HTTP/HTTPS traffic is supported at this time.
- Network traffic filtering rules
  - Traditional access lists with the ability to control traffic based on protocol, IP address, subnet and port.
- Outbound SNAT support
  - Obviously something that needs to be supported to handle outbound traffic in a centralized way 🙂
- Azure Monitor logging
  - Logging is one of the most important parts of security in general. All new Azure services use Azure Monitor for logging, and Azure Firewall is no exception. Accepted and denied connections based on network and application rules are logged.
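To make the difference between the two rule types concrete, here is a minimal Python model. It is purely illustrative and not how Azure Firewall is implemented or configured: a network rule matches on protocol, source/destination prefixes and destination port, while an application rule matches the requested host name, and only for HTTP/HTTPS. The class names, the wildcard syntax and all addresses are assumptions made for the example.

```python
import ipaddress
from dataclasses import dataclass
from fnmatch import fnmatchcase


def _in_any(ip: str, prefixes: list) -> bool:
    """True if the address falls inside any of the CIDR prefixes."""
    addr = ipaddress.ip_address(ip)
    return any(addr in ipaddress.ip_network(p) for p in prefixes)


@dataclass
class NetworkRule:
    """Illustrative network rule: protocol, source/destination prefixes, ports."""
    protocol: str        # "TCP", "UDP", "ICMP" or "Any"
    sources: list        # CIDR strings, e.g. "10.1.0.0/16"
    destinations: list
    ports: list          # destination ports; not checked for ICMP

    def matches(self, protocol: str, src: str, dst: str, port=None) -> bool:
        if self.protocol not in ("Any", protocol):
            return False
        if not (_in_any(src, self.sources) and _in_any(dst, self.destinations)):
            return False
        return protocol == "ICMP" or port in self.ports


@dataclass
class ApplicationRule:
    """Illustrative application rule: HTTP/HTTPS only, matched on the host name."""
    sources: list
    target_fqdns: list   # exact names or hypothetical wildcards such as "*.example.com"

    def matches(self, scheme: str, src: str, host: str) -> bool:
        if scheme not in ("http", "https"):
            return False  # FQDN filtering only covers HTTP/S in the preview
        if not _in_any(src, self.sources):
            return False
        return any(fnmatchcase(host.lower(), p.lower()) for p in self.target_fqdns)


ssh = NetworkRule("TCP", ["10.1.0.0/16"], ["0.0.0.0/0"], [22])
web = ApplicationRule(["10.1.0.0/16"], ["ifconfig.co"])
print(ssh.matches("TCP", "10.1.0.4", "52.1.2.3", 22))        # True
print(web.matches("https", "10.1.0.4", "ifconfig.co"))        # True
print(web.matches("https", "10.1.0.4", "www.microsoft.com"))  # False
```

The point of the split is that a network rule never looks at names, while an application rule never looks at anything but the source and the requested FQDN, which is why FQDN filtering sidesteps the problem of maintaining IP lists.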

As you can see, the capabilities in the preview are quite sparse, but as with all other services, I expect more and more features to come over time.

Sizing and cost

Unlike Application Gateway / WAF, where you need to select the number of instances and instance sizes, Azure Firewall is highly available by default and scales automatically based on demand.

NO MORE MANUAL SCALING!!! 🙂

Getting started

Prerequisites

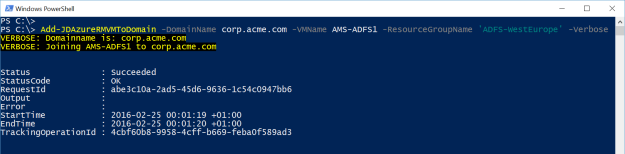

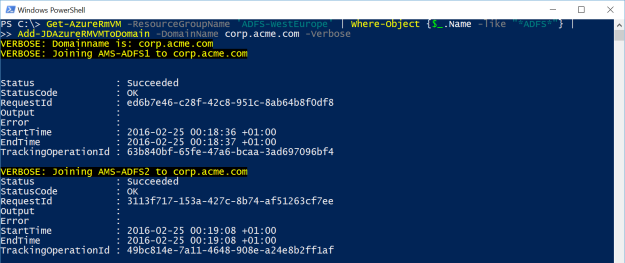

- Since the service is in preview, you first have to enable the Azure Firewall public preview by registering the required features with the following PowerShell commands (AzureRM module). Feature registration can take up to 30 minutes to complete, and you can check its state with Get-AzureRmProviderFeature.

```powershell
# Register the two preview features in the Microsoft.Network provider namespace
Register-AzureRmProviderFeature -FeatureName AllowRegionalGatewayManagerForSecureGateway -ProviderNamespace Microsoft.Network
Register-AzureRmProviderFeature -FeatureName AllowAzureFirewall -ProviderNamespace Microsoft.Network
```

- The firewall needs one dedicated subnet, which must be named AzureFirewallSubnet and be at least a /25. That is a fairly large subnet, but I suppose it is because the firewall needs address space to scale out without any restrictions. This isn't very clear in the documentation, and Azure will not complain about it until you actually deploy the firewall.
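Since the problem only surfaces at deployment time, a quick pre-flight check can save a failed deployment. The sketch below is a hypothetical helper, not an Azure API: it validates the subnet name and the /25 minimum from the preview, and computes how many addresses remain after the five that Azure reserves in every subnet. The address ranges are made up.

```python
import ipaddress

REQUIRED_NAME = "AzureFirewallSubnet"
MIN_PREFIX_LEN = 25            # /25 minimum in the preview
AZURE_RESERVED_PER_SUBNET = 5  # first four addresses and the last one in every Azure subnet


def check_firewall_subnet(name: str, cidr: str) -> list:
    """Return a list of problems with a proposed firewall subnet (empty if OK)."""
    problems = []
    net = ipaddress.ip_network(cidr)  # raises ValueError for a malformed CIDR
    if name != REQUIRED_NAME:  # exact, case-sensitive comparison is a simplification
        problems.append(f"subnet must be named {REQUIRED_NAME}, got {name!r}")
    if net.prefixlen > MIN_PREFIX_LEN:
        problems.append(f"/{net.prefixlen} is too small; use /{MIN_PREFIX_LEN} or larger")
    return problems


def usable_addresses(cidr: str) -> int:
    """Addresses left after Azure's per-subnet reservations."""
    return ipaddress.ip_network(cidr).num_addresses - AZURE_RESERVED_PER_SUBNET


print(check_firewall_subnet("AzureFirewallSubnet", "10.0.1.0/25"))  # []
print(check_firewall_subnet("FirewallSubnet", "10.0.1.0/26"))       # two problems
print(usable_addresses("10.0.1.0/25"))                               # 123
```

A /25 holds 128 addresses, so 123 are left for the firewall to scale into.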

Deploying the firewall

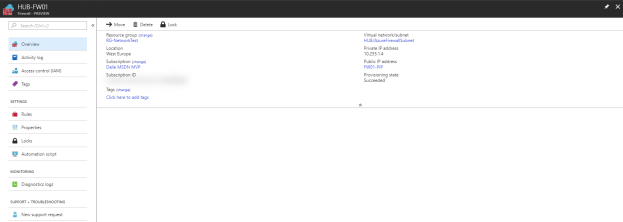

The firewall service integrates nicely into the VNet settings, and you can start a deployment directly from there.

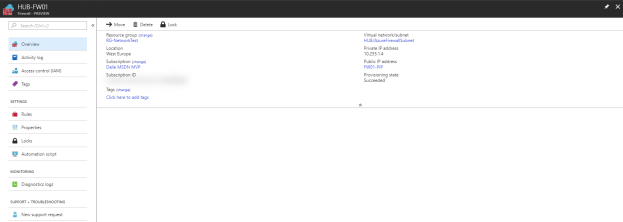

As seen above, except for the firewall subnet and the standard SKU public IP address, there are very few things that can be configured during deployment.

It only took 5-6 minutes to deploy the firewall, which is significantly faster than my experience with, e.g., Application Gateways and VPN Gateways. Good for us stressed consultants 🙂

Putting it to the test

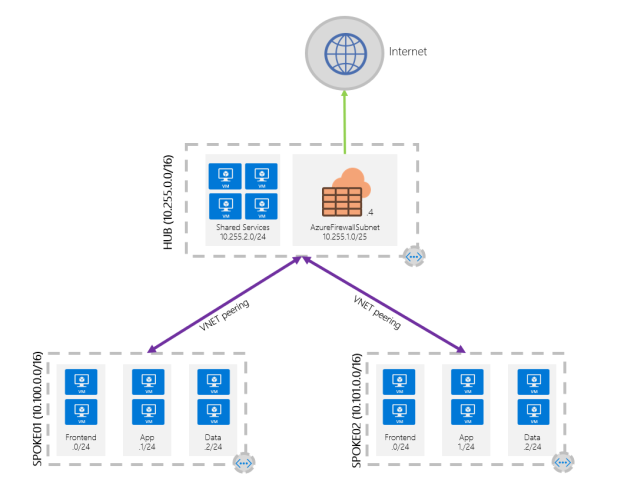

User Defined Routes

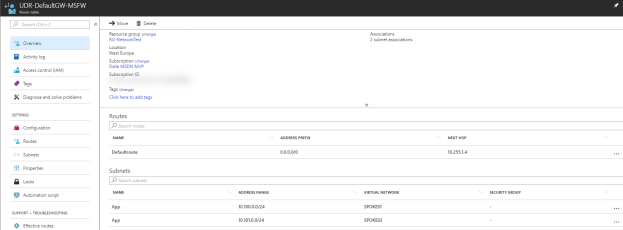

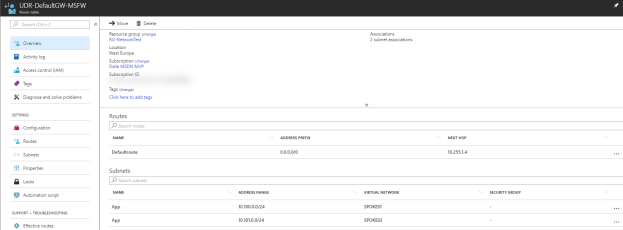

To route all outbound Internet traffic from the spokes via the hub through Azure Firewall, I first created a User Defined Route (UDR) for 0.0.0.0/0 with the firewall as next hop, and associated the spoke subnets with it. Depending on how your traffic flows, you might want another configuration.
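Azure selects a route by longest prefix match, so a 0.0.0.0/0 UDR only catches traffic that no more specific route covers. The sketch below models that selection in Python for one spoke subnet. The address spaces (hub 10.0.0.0/16, spoke 10.1.0.0/16, second spoke 10.2.0.0/16) and the firewall's private IP 10.0.1.4 are hypothetical, and the system 0.0.0.0/0 Internet route is left out because a user-defined route with the same prefix takes precedence over it.

```python
import ipaddress

# Hypothetical effective routes for a spoke subnet: (prefix, next hop type, next hop IP).
# The system 0.0.0.0/0 -> Internet route is omitted because the UDR with the same
# prefix overrides it.
SPOKE_ROUTES = [
    ("10.1.0.0/16", "VnetLocal", None),             # the spoke's own address space
    ("10.0.0.0/16", "VNetPeering", None),           # the peered hub VNet
    ("0.0.0.0/0", "VirtualAppliance", "10.0.1.4"),  # UDR -> firewall private IP (assumed)
]


def next_hop(destination: str, routes=SPOKE_ROUTES):
    """Select the matching route with the longest prefix."""
    dst = ipaddress.ip_address(destination)
    matching = [r for r in routes if dst in ipaddress.ip_network(r[0])]
    if not matching:
        return None
    return max(matching, key=lambda r: ipaddress.ip_network(r[0]).prefixlen)


print(next_hop("52.1.2.3"))   # Internet traffic -> firewall
print(next_hop("10.2.0.4"))   # another spoke (not peered with this one) -> firewall
print(next_hop("10.0.0.10"))  # the hub itself -> directly over the peering
```

Note that traffic to the hub's own address space still goes straight over the peering; only destinations without a more specific route end up at the firewall.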

Note that until you have created your default rules in the firewall, the machines in these subnets will not be able to access anything.

Management experience – creating basic rules

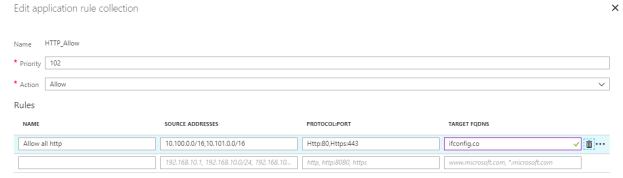

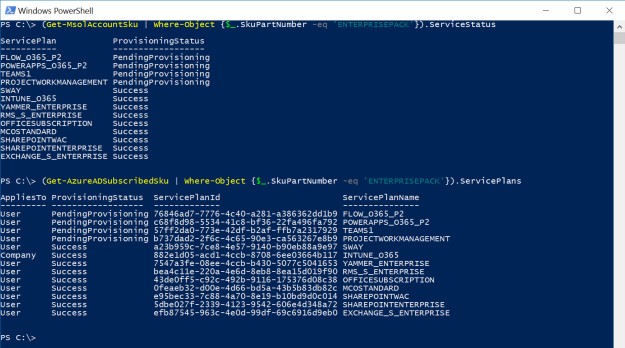

Application and network rules are stored in something called rule collections. A rule collection is a list of rules that share the same action and priority. A network rule collection is a list of network rules, and an application rule collection is a list of application rules. Network rule collections are always processed before application rule collections. All rules are terminating, so if a match is found in a network rule collection, the following application rule collections are not processed for that session.

Except for the built-in collection of infrastructure FQDNs used by Compute, Storage and Diagnostics, which are allowed by default, all connections are denied by default, so we are really in full control of our outbound traffic here.
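To make the processing order concrete, here is a minimal Python model of it. It is an illustration, not the firewall's implementation: collection names, priorities and the request fields are made up, rules are plain predicates, and evaluating the built-in infrastructure collection after the user-defined collections (just before the default deny) is an assumption of this sketch.

```python
from dataclasses import dataclass, field
from typing import Callable

Request = dict  # e.g. {"proto": "TCP", "port": 443, "host": "ifconfig.co"}


@dataclass
class RuleCollection:
    name: str
    priority: int   # lower number is processed first
    action: str     # "Allow" or "Deny", shared by every rule in the collection
    rules: list = field(default_factory=list)  # predicates: Request -> bool


def evaluate(request: Request, network: list, application: list,
             infrastructure: Callable[[Request], bool] = lambda r: False):
    """Return (action, collection name) for the first matching rule."""
    # Network collections are always processed before application collections;
    # within each kind, collections are taken in priority order.
    for kind in (network, application):
        for coll in sorted(kind, key=lambda c: c.priority):
            if any(rule(request) for rule in coll.rules):
                return coll.action, coll.name  # rules are terminating
    # Built-in infrastructure FQDNs (Compute, Storage, Diagnostics); checking them
    # here, before the default deny, is an assumption of this sketch.
    if infrastructure(request):
        return "Allow", "infrastructure"
    return "Deny", "default"


allow_ssh = RuleCollection("net-allow", 100, "Allow",
                           [lambda r: r["proto"] == "TCP" and r["port"] == 22])
deny_https = RuleCollection("net-deny", 200, "Deny", [lambda r: r["port"] == 443])
allow_web = RuleCollection("app-allow", 100, "Allow",
                           [lambda r: r.get("host") == "ifconfig.co"])

web = {"proto": "TCP", "port": 443, "host": "ifconfig.co"}
print(evaluate(web, [allow_ssh], [allow_web]))               # ('Allow', 'app-allow')
print(evaluate({**web, "host": "microsoft.com"}, [allow_ssh], [allow_web]))  # ('Deny', 'default')
print(evaluate(web, [allow_ssh, deny_https], [allow_web]))   # ('Deny', 'net-deny')
```

The last call shows why the order matters: a network rule that matches terminates processing, so the application rule allowing ifconfig.co is never reached.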

The rule management experience is fairly basic but works well. It takes up to a minute to create or edit a rule, which is much better than Application Gateway, but it might still be a bit frustrating when making a lot of changes in the GUI. The other thing I feel is missing is the possibility to create host groups for subnets or VMs/hosts and apply the rules to those instead of to IPs and subnets. That would make it a lot easier to get an overview of what's allowed in a specific rule.

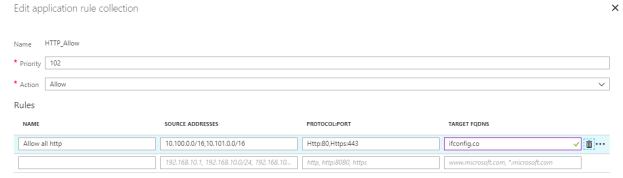

I created one default network rule collection allowing SSH and DNS, and one application rule allowing HTTP/S traffic only to ifconfig.co from my spoke VNets.

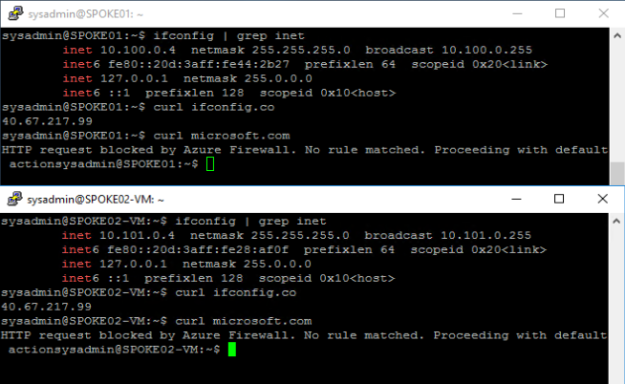

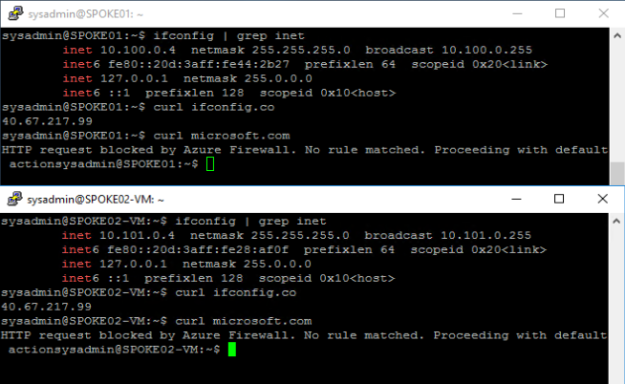

As seen in the screenshots, both machines used the same external IP address when browsing the Internet. You can also see that ifconfig.co was allowed but microsoft.com was denied with the message "HTTP request blocked by Azure Firewall. No rule matched. Proceeding with default actions".

Spoke to spoke communication

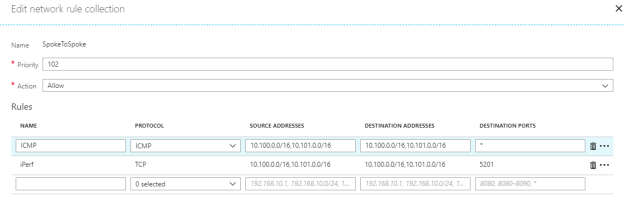

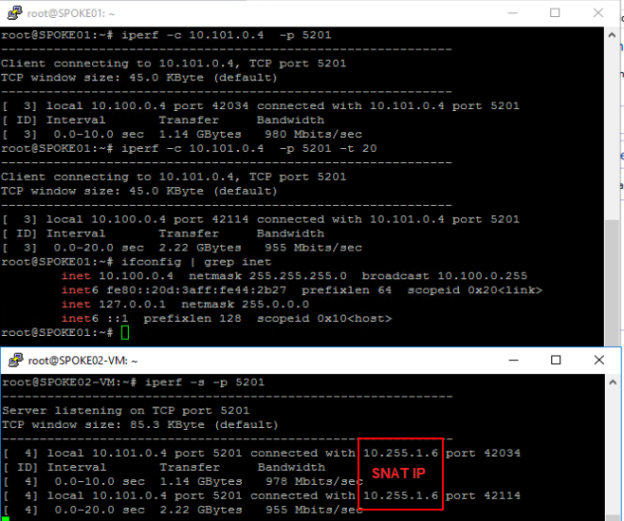

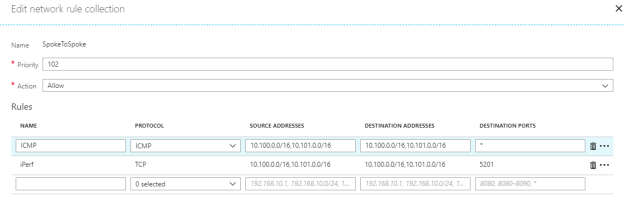

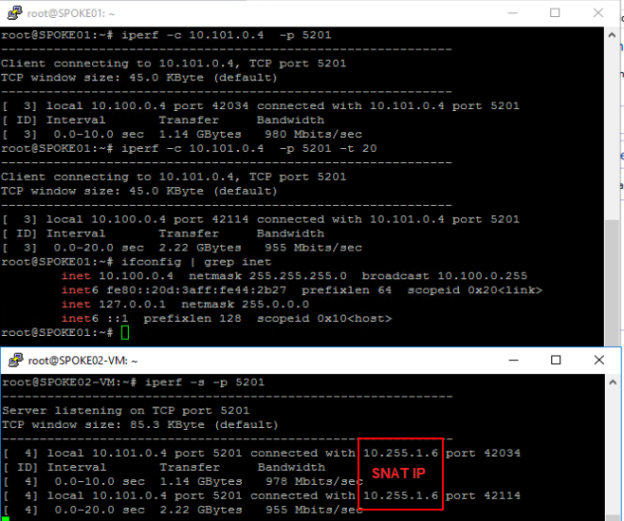

Another scenario commonly handled by an NVA is spoke-to-spoke traffic. Obviously it is also possible to solve this with VNet peering between the spokes, but by routing the traffic through the hub we get much more granular control. To try out this scenario, I created an additional rule collection allowing ICMP and TCP 5201 (iPerf) between the subnets, and it worked well. The firewall still applies SNAT rather than just routing the traffic, so the connections appear to come from the firewall and not from the true source IP. I could also see that the connections sometimes came from different IPs (different load-balanced firewall nodes).
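The SNAT behaviour described above can be illustrated with a simplified model: each firewall instance rewrites the source to its own private IP and a new source port, and remembers the mapping so replies can be translated back. Everything here is an assumption for illustration (the instance IPs, the naive port allocator, and the CRC32 of the flow standing in for the load balancer); Azure Firewall's internals are not documented at this level.

```python
import itertools
import zlib


class SnatInstance:
    """Hypothetical model of one firewall instance performing source NAT."""

    def __init__(self, private_ip: str):
        self.private_ip = private_ip
        self._next_port = itertools.count(1024)  # naive source-port allocator
        self.table = {}  # (translated ip, port) -> (original ip, port)

    def translate(self, src_ip: str, src_port: int):
        translated = (self.private_ip, next(self._next_port))
        self.table[translated] = (src_ip, src_port)
        return translated

    def reverse(self, ip: str, port: int):
        """Map a reply's destination back to the original source."""
        return self.table[(ip, port)]


# Two instances with made-up addresses inside AzureFirewallSubnet
INSTANCES = [SnatInstance("10.0.1.5"), SnatInstance("10.0.1.6")]


def forward(src_ip, src_port, dst_ip, dst_port):
    """Pin the flow to one instance (CRC32 of the flow as a stand-in load balancer) and SNAT it."""
    key = f"{src_ip}:{src_port}->{dst_ip}:{dst_port}".encode()
    instance = INSTANCES[zlib.crc32(key) % len(INSTANCES)]
    return instance, instance.translate(src_ip, src_port)


inst, seen_by_target = forward("10.1.0.4", 50000, "10.2.0.4", 5201)
print(seen_by_target)                 # an instance IP and port, not 10.1.0.4
print(inst.reverse(*seen_by_target))  # ('10.1.0.4', 50000)
```

Because each flow is pinned to whichever instance the load balancer picks, the target sees a firewall address rather than the real source, and different flows from the same machine can show up with different firewall IPs.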

Routing the traffic through the hub added slightly more latency than I expected, but it is generally something that is possible to live with.

Pushing the limits – throughput test

Since it is also supposed to offer virtually unlimited capacity based on demand, I also took the opportunity to do a basic throughput test between the hosts in my environment. Between my lightweight B2s machines, I got almost 1 Gbit/s, which I consider more than acceptable. Your mileage may vary depending on the number of connections etc., but it looks really promising.

Conclusion

Even though it is currently a minimum viable product and the first public preview of the service, I have to say that, overall, I am pleasantly surprised at how well the firewall actually performed. Obviously, some polishing is required in the user interface, logging and additional capabilities before it is a full-fledged cloud-native firewall, but it definitely has potential. It is a good complement to, and sometimes even a replacement for, traditional NVAs.

Just ping me on Twitter if you have questions or comments!

/Johan